We often associate big data with research problems that matter to humanity as a whole: human evolution, energy sources and cancer. We also tend to assume that processing it requires big iron, such as compute nodes, scale-out storage and parallel systems, to work through the massive amounts of data.

Most people think of reams upon reams of contiguous terabytes when they think of big data: seemingly endless streams of ones and zeros worked on by a myriad of compute nodes for causes necessary for human progress. This view of big data as a monolith is not always correct. Ordinary individuals also generate problems that call for big data processing. Put together, many small, independent pieces of data can add up to a really big problem.

This is how some big data hides right in front of our eyes. The society we live in today is obsessed with communication, and what we produce each day moves around the world at lightning speed without our noticing how much data our daily interactions create. We text, tweet, Instagram and e-mail daily, adding to a pool of small, personalized messages that together make up a huge amount of data. For example, businesses today deal with:

- 76B¹ – the average number of legitimate e-mails businesses receive per day

- 75B² – text messages exchanged between businesses and customers per day

- 0.5B³ – the daily volume of tweets, a significant portion of which deals directly with business matters
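To get a feel for these volumes, the daily figures can be converted into approximate per-second rates. The short Python sketch below does only that arithmetic; the variable and function names are illustrative, and it assumes traffic is spread evenly over the day, which real traffic is not.

```python
SECONDS_PER_DAY = 24 * 60 * 60

# Daily volumes quoted in the list above, in messages per day.
daily_volumes = {
    "email": 76e9,
    "text": 75e9,
    "tweets": 0.5e9,
}


def per_second(daily_count: float) -> float:
    """Average messages per second, assuming an even spread over the day."""
    return daily_count / SECONDS_PER_DAY


rates = {channel: per_second(count) for channel, count in daily_volumes.items()}

for channel, rate in rates.items():
    print(f"{channel}: about {rate:,.0f} messages per second")
```

Even as a rough average, e-mail and text messaging each work out to several hundred thousand messages every second.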

At the core

The dual challenge for businesses is to comprehend the unstructured data and then respond appropriately in time. Simply put, this could be an operational cost that could raise your business’s overheads. The trick is learning how to satisfy the customer while keeping the bottom line in mind.

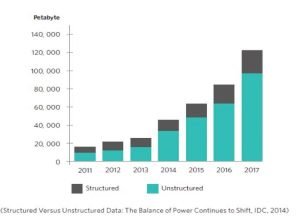

The chart below shows how most of the data that affects business is unstructured and has an exponential growth pattern:4

Since the flow of information is not set to abate anytime soon, businesses need strategies to redirect that flow and process information more quickly. Enterprise Content Management (ECM) systems can use Artificial Intelligence (AI) tools to do this. Such tools can carry out a number of different actions: sending automatic responses to queries, channeling queries to the relevant person, department or customer service agent, suggesting how a query should be handled, or even initiating a business process workflow.
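The actions listed above can be pictured as a small triage step applied to each incoming message. The Python sketch below is only an illustration under stated assumptions: the keyword rules, department names, canned reply and the `triage` function are all hypothetical, and simple keyword matching stands in for whatever AI model a real ECM tool would use.

```python
from dataclasses import dataclass

# Hypothetical routing rules (keyword -> department). A real tool would
# likely use a trained classifier; keyword matching is only a stand-in.
ROUTING_RULES = {
    "invoice": "billing",
    "refund": "billing",
    "password": "it_support",
    "delivery": "logistics",
}

# Hypothetical canned replies for queries that can be answered automatically.
AUTO_REPLIES = {
    "opening hours": "We are open Monday to Friday, 9:00 to 17:00.",
}


@dataclass
class Action:
    kind: str    # "auto_reply", "route" or "manual_review"
    detail: str  # reply text, target department, or "unassigned"


def triage(message: str) -> Action:
    """Pick one action for an incoming message using the rules above."""
    text = message.lower()
    # 1. Answer automatically when a canned reply matches.
    for phrase, reply in AUTO_REPLIES.items():
        if phrase in text:
            return Action("auto_reply", reply)
    # 2. Otherwise route to the first department whose keyword appears.
    for keyword, department in ROUTING_RULES.items():
        if keyword in text:
            return Action("route", department)
    # 3. Fall back to a person when nothing matches.
    return Action("manual_review", "unassigned")
```

For instance, `triage("Where is my refund?")` returns a `route` action for the hypothetical `billing` department, while a message that matches no rule falls through to manual review.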

Traditional big data and communication big data differ in many ways, one of the most important being the kind of hardware used to process the data. Traditional big data uses high-performance computing stacks, whereas communication big data typically uses two to four servers. The number of servers in the latter case depends on how many processing actions run concurrently.

Sure, the advantages of processing small data have been recorded and commented on extensively. Many people talk about the capturing, processing, archiving and retrieval of data. But for me, that is not the real gem of small data processing. The real advantage lies in comprehending the message behind the data, learning from it and then taking relevant action with that knowledge. Currently, though, data capture rests in the hands of error-prone people, and that is where the core of poor customer service lies.

1 The Radicati Group Email Statistics Report, 2015 – 2019

2 Derived from http://www.grabstats.com/statmain.aspx?StatID=402

3 http://www.internetlivestats.com/twitter-statistics/

4 International Data Corporation (IDC)